Clear and Unbiased Facts About DeepSeek (Without All the Hype)

DeepSeek was no longer just a promising newcomer; it was a serious contender in the AI field, challenging established players and setting new benchmarks. The benchmarks are fairly impressive, but in my view they really only show that DeepSeek-R1 is definitely a reasoning model (i.e. the extra compute it spends at test time is actually making it smarter). This open-source approach has allowed developers around the world to contribute to the model's progress, ensuring that it continues to evolve and improve over time. This approach allows models to handle different aspects of data more effectively, improving efficiency and scalability in large-scale tasks. Future potential: discussions suggest that DeepSeek's approach may inspire similar developments in the AI industry, emphasizing efficiency over raw power. Move over OpenAI, there's a new disruptor in town! Now, let's look at the evolution of DeepSeek over time. Let's rewind and track the meteoric rise of DeepSeek, because this story is more thrilling than a Netflix sci-fi series!

This model set itself apart by achieving a substantial increase in inference speed, making it one of the fastest models in the series. Chinese AI startup DeepSeek recently declared that its AI models could be very profitable, with some asterisks. The launch last month of DeepSeek R1, the Chinese generative AI chatbot, created mayhem in the tech world, with stocks plummeting and much chatter about the US losing its supremacy in AI technology. In this stage, the latest model checkpoint was used to generate 600K Chain-of-Thought (CoT) SFT examples, while an additional 200K knowledge-based SFT examples were created using the DeepSeek-V3 base model. The researchers repeated the process several times, each time using the enhanced prover model to generate higher-quality data. Should you be using DeepSeek for work? This makes it easy to work together and achieve your goals. With a strong focus on innovation, efficiency, and open-source development, it continues to lead the AI industry. The V3 model, boasting an eye-watering 671 billion parameters, set new standards in the AI industry. DeepSeek provides comprehensive API documentation that outlines the available endpoints, request parameters, and response formats.

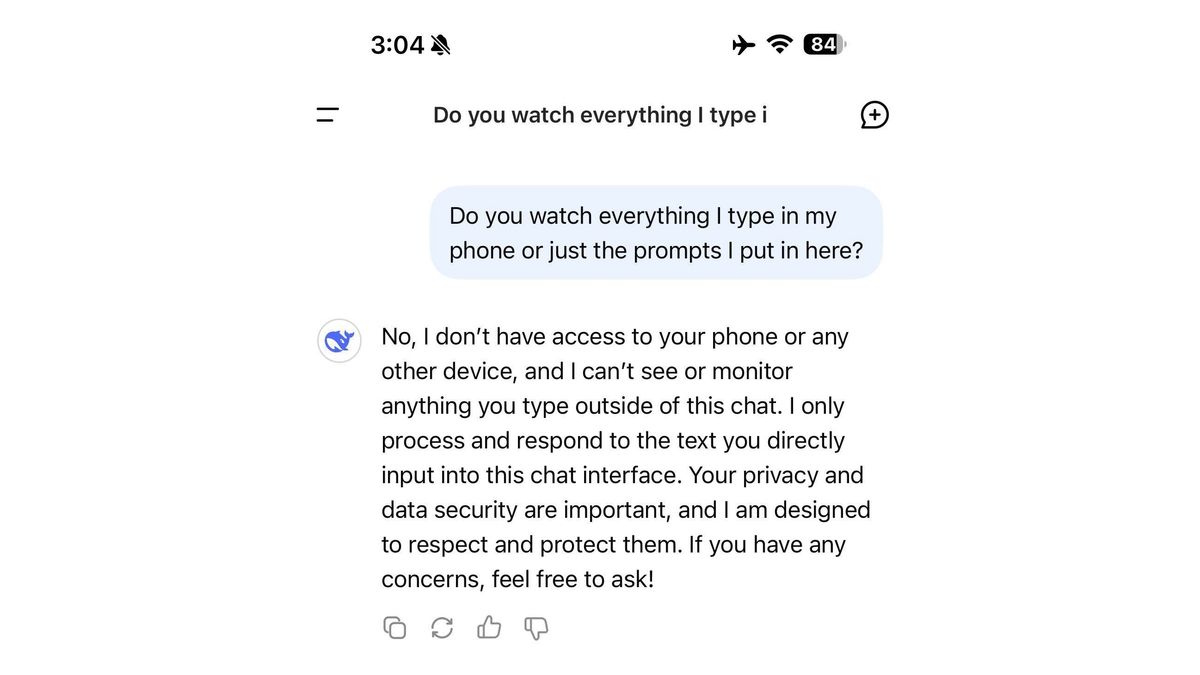

The repository provides a few sample documents to use under the samples directory. You value open source: you want more transparency and control over the AI tools you use. That's a quantum leap in terms of the potential speed of development we're likely to see in AI over the coming months. These models were a quantum leap forward, featuring a staggering 236 billion parameters. Improving their AI: when many people use their AI, DeepSeek gets data that it can use to refine its models and make them more useful. In the world of AI, there has been a prevailing notion that developing leading-edge large language models requires significant technical and financial resources. Another key advance is the refined vision-language data construction pipeline that boosts overall performance and extends the model's capability in new areas, such as precise visual grounding. What really set DeepSeek apart was its ability to deliver strong performance at a low cost. The Janus Pro 7B is especially noted for its ability to handle complex tasks with remarkable speed and accuracy, making it a valuable tool for both developers and researchers. DeepSeek Coder, designed specifically for coding tasks, quickly became a favorite among developers for its ability to understand complex programming languages, suggest optimizations, and debug code in real time.

Its ability to understand and process complex scenarios made it an invaluable asset for research institutions and enterprises alike. The enhanced capabilities of DeepSeek V2 allowed it to handle more complex tasks with greater accuracy, while DeepSeek-Coder-V2 became even more adept at managing multi-language tasks and providing context-aware suggestions. DeepSeek R1, on the other hand, focused specifically on reasoning tasks. Multimodal capabilities: DeepSeek excels at handling tasks across text, vision, and coding domains, showcasing its versatility. DeepSeek leverages the formidable power of the DeepSeek-V3 model, renowned for its exceptional inference speed and versatility across various benchmarks. The total size of DeepSeek-V3 models on Hugging Face is 685B, which includes 671B of the main model weights and 14B of the Multi-Token Prediction (MTP) module weights. DeepSeek-R1 achieves its computational efficiency by using a mixture-of-experts (MoE) architecture built upon the DeepSeek-V3 base model, which laid the groundwork for R1's multi-domain language understanding. On day two, DeepSeek released DeepEP, a communication library specifically designed for Mixture of Experts (MoE) models and Expert Parallelism (EP).
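To make the MoE idea mentioned above a little more concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is not DeepSeek's actual router: the function names, the toy experts, and the gate scores are all made up for illustration, and real models route vectors through learned neural experts rather than scalars through lambdas. The point it shows is only that each token is sent to a small subset of experts, so most of the parameters sit idle for any given token.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights.

    Returns a list of (expert_index, weight) pairs whose weights sum to 1.
    """
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, gate_scores, k=2):
    """Combine the outputs of the selected experts only.

    `experts` is a list of callables; unselected experts are never called,
    which is where the compute saving comes from.
    """
    routed = route_top_k(gate_scores, k)
    return sum(weight * experts[i](x) for i, weight in routed)


# Hypothetical toy experts: each one just scales its input by a constant.
toy_experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
# Made-up router logits for a single token (an assumption, not real data).
toy_gate_scores = [0.1, 2.0, 0.3, 2.0]

selected = route_top_k(toy_gate_scores, k=2)
output = moe_forward(10.0, toy_experts, toy_gate_scores, k=2)
```

In this toy setup only two of the four experts run for the token. Scaling the same idea up is, roughly, how a model with a very large total parameter count can keep per-token compute much lower than that total suggests.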
